Artificial Intelligence (AI) code generators have become a transformative tool in software development, automating code creation and enhancing productivity. However, reliance on these AI systems introduces several challenges, particularly in ensuring the quality and reliability of the generated code. This article explores the key challenges in sanity testing AI code generators and proposes solutions to address them effectively.

1. Understanding Sanity Testing in the Context of AI Code Generators

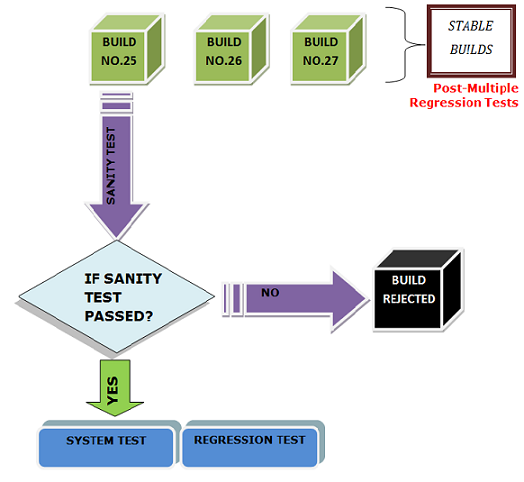

Sanity testing, a term often used interchangeably with “smoke testing,” involves a preliminary check to ensure that a software application or system functions correctly at a basic level. In the context of AI code generators, sanity testing ensures that the generated code is functional, performs as expected, and meets basic requirements before more extensive testing is conducted. This is vital for maintaining the integrity and reliability of the code produced by AI systems.

2. Challenges in Sanity Testing AI Code Generators

2.1. Quality and Accuracy of Generated Code

One of the primary challenges is ensuring the quality and accuracy of the code generated by AI systems. AI code generators, while advanced, can sometimes produce code with syntax errors, logical flaws, or security vulnerabilities. These issues can arise from limitations in the training data or from the complexity of the code requirements.

Solution: To address this challenge, it is essential to implement robust validation mechanisms. Automated linting tools and static code analyzers can be integrated into the development pipeline to catch syntax and style errors early. Additionally, unit tests and integration tests help verify that the generated code functions as expected in different scenarios.
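As a minimal sketch of the validation described above, the following Python snippet runs two basic checks on a generated snippet: a syntax check using the standard `ast` module, and a single smoke test against a known input/output pair. The helper names (`check_syntax`, `smoke_test`) and the sample snippets are illustrative assumptions, not part of any particular tool, and a real pipeline would run untrusted code in a sandbox rather than calling `exec` directly.

```python
import ast


def check_syntax(source: str) -> list[str]:
    """Return a list of syntax problems (empty if the code parses)."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return [f"line {exc.lineno}: {exc.msg}"]
    return []


def smoke_test(source: str, func_name: str, args: tuple, expected) -> bool:
    """Run the code in a fresh namespace and check one known input/output pair.

    Assumption: the code is trusted or already sandboxed; exec() on
    untrusted generated code is unsafe.
    """
    namespace: dict = {}
    exec(compile(source, "<generated>", "exec"), namespace)
    func = namespace.get(func_name)
    return callable(func) and func(*args) == expected


# Hypothetical generator outputs: one valid, one missing a colon.
GOOD = "def add(a, b):\n    return a + b\n"
BAD = "def add(a, b)\n    return a + b\n"

if not check_syntax(GOOD):
    print("GOOD passes smoke test:", smoke_test(GOOD, "add", (2, 3), 5))
print("BAD syntax problems:", check_syntax(BAD))
```

Running the syntax check first keeps the smoke test from ever executing code that cannot be parsed.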

2.2. Contextual Understanding and Code Relevance

AI code generators may struggle with contextual understanding, leading to code that is not relevant or appropriate for the given context. This issue is particularly challenging when the AI system lacks domain-specific knowledge or when it encounters ambiguous requirements.

Solution: Incorporating domain-specific training data can enhance the AI’s contextual understanding. In addition, providing detailed prompts and clear requirements to the AI system can improve the relevance of the generated code. Manual review and validation by experienced developers can also help ensure that the code aligns with the project’s needs.

2.3. Handling Edge Cases and Unusual Scenarios

AI code generators may not always handle edge cases or unusual scenarios effectively, as these situations may not be well represented in the training data. This limitation can result in code that fails under specific conditions or fails to handle exceptions properly.

Solution: To address this challenge, conduct thorough testing that includes edge cases and unusual scenarios. Developers can create a diverse set of test cases covering a range of input conditions, such as empty, boundary, and invalid inputs, to ensure that the generated code performs reliably in different situations.
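The edge-case testing described above can be sketched with Python's standard `unittest` module. The `average` function below is a hypothetical stand-in for generated code, and the chosen cases (single element, mixed signs, all zeros, empty input) are illustrative assumptions rather than a complete test plan.

```python
import unittest


def average(values):
    """Stand-in for a function produced by an AI code generator (hypothetical)."""
    if not values:
        raise ValueError("values must not be empty")
    return sum(values) / len(values)


class AverageEdgeCases(unittest.TestCase):
    def test_typical_and_boundary_inputs(self):
        cases = [
            ([1, 2, 3], 2.0),   # typical input
            ([5], 5.0),         # single element
            ([-1, 1], 0.0),     # mixed signs
            ([0, 0, 0], 0.0),   # all zeros
        ]
        for values, expected in cases:
            with self.subTest(values=values):
                self.assertAlmostEqual(average(values), expected)

    def test_empty_input_raises(self):
        # The empty list is the case most likely to be missed.
        with self.assertRaises(ValueError):
            average([])


def run_suite() -> unittest.TestResult:
    """Load and run the edge-case tests, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AverageEdgeCases)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
print("all edge cases passed:", result.wasSuccessful())
```

Using `subTest` reports each failing input separately, which makes it easier to see which edge case the generated code mishandles.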

2.4. Debugging and Troubleshooting Generated Code

When issues arise with AI-generated code, debugging and troubleshooting can be challenging. The AI system may not provide adequate explanations or insights into the code it produces, making it difficult to identify and resolve issues.

Solution: Enhancing the transparency and interpretability of the AI code generation process can aid debugging. Providing developers with detailed logs and explanations of the generation process can help them understand how the AI arrived at specific solutions. Additionally, incorporating tools that facilitate code analysis and debugging can streamline the troubleshooting process.
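One way to make the generation process easier to trace is to store a structured record alongside each generated snippet. The sketch below is only an illustration: the `GenerationRecord` class, its field names, and the model identifier are assumptions, not the logging format of any specific AI tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class GenerationRecord:
    """Hypothetical log entry describing one code generation request."""
    prompt: str
    model: str                  # assumed model identifier
    temperature: float          # assumed sampling parameter
    code: str
    notes: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the record, adding a hash that identifies the exact output."""
        data = asdict(self)
        data["code_sha256"] = hashlib.sha256(self.code.encode("utf-8")).hexdigest()
        return json.dumps(data, sort_keys=True)


record = GenerationRecord(
    prompt="Write a function that adds two numbers.",
    model="example-model-v1",
    temperature=0.2,
    code="def add(a, b):\n    return a + b\n",
    notes=["syntax check passed"],
)
print(record.to_json())
```

Keeping the prompt, settings, and a hash of the output together lets a developer reproduce or compare generations when a defect is traced back to a specific snippet.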

2.5. Ensuring Consistency and Maintainability

AI-generated code may sometimes lack consistency and maintainability, especially if the code is generated using different AI models or configurations. This inconsistency can lead to difficulties in managing and updating the code over time.

Solution: Establishing coding standards and guidelines can help ensure consistency in the generated code. Automated code formatters and style checkers can enforce these standards. Additionally, version control practices and regular code reviews can improve maintainability and address inconsistencies.
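To illustrate how a style checker can enforce such standards, here is a small sketch built on Python's `ast` module. The two rules it applies (snake_case function names and mandatory docstrings) and the `style_violations` helper are assumptions chosen for the example; an established linter would normally do this work in practice.

```python
import ast
import re

SNAKE_CASE = re.compile(r"^[a-z_][a-z0-9_]*$")


def style_violations(source: str) -> list[str]:
    """Report functions that break two example rules: snake_case names, docstrings."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                problems.append(
                    f"line {node.lineno}: function '{node.name}' is not snake_case"
                )
            if ast.get_docstring(node) is None:
                problems.append(
                    f"line {node.lineno}: function '{node.name}' has no docstring"
                )
    return problems


# Hypothetical generator outputs with inconsistent and consistent style.
SAMPLE = "def computeTotal(items):\n    return sum(items)\n"
CLEAN = 'def compute_total(items):\n    """Sum the items."""\n    return sum(items)\n'

for problem in style_violations(SAMPLE):
    print(problem)
print("clean sample violations:", style_violations(CLEAN))
```

Applying the same rules to every generated file, regardless of which model or configuration produced it, is what keeps the code base uniform.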

3. Best Practices for Effective Sanity Testing

To ensure the effectiveness of sanity testing for AI code generators, consider the following best practices:

3.1. Integrate Continuous Testing

Implement continuous testing practices to automate the sanity testing process. This involves integrating automated checks into the development pipeline to provide immediate feedback on the quality and functionality of the generated code.
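A continuous testing step can be as simple as a gate that runs a set of named checks and reports a pass or fail status to the pipeline. The following sketch assumes hypothetical names (`run_sanity_gate`, `exit_code`, `CHECKS`) and two deliberately basic checks; a real pipeline would plug in its own linters and test suites.

```python
from typing import Callable

Check = Callable[[str], bool]


def not_empty(source: str) -> bool:
    """The generator returned something other than whitespace."""
    return bool(source.strip())


def parses(source: str) -> bool:
    """The code compiles; compile() raises SyntaxError otherwise."""
    compile(source, "<generated>", "exec")
    return True


# Hypothetical set of checks run on every generated snippet.
CHECKS: dict[str, Check] = {"not_empty": not_empty, "parses": parses}


def run_sanity_gate(source: str, checks: dict[str, Check]) -> dict[str, bool]:
    """Run every check; a check that raises is recorded as a failure."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check(source))
        except Exception:
            results[name] = False
    return results


def exit_code(results: dict[str, bool]) -> int:
    """Return 0 when all checks pass, 1 otherwise (for use as a process status)."""
    return 0 if all(results.values()) else 1


results = run_sanity_gate("def f(:\n    pass\n", CHECKS)
print(results, "exit code:", exit_code(results))
```

A CI job could pass the value of `exit_code` to `sys.exit`, so that a failing snippet stops the pipeline before more expensive tests run.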

3.2. Foster Collaboration Between AI and Human Developers

Encourage collaboration between AI systems and human developers. While AI can generate code quickly, human developers can provide valuable insights, contextual understanding, and validation. Combining the strengths of both can lead to higher-quality outcomes.

3.3. Invest in Robust Training Data

Investing in high-quality, diverse training data for AI code generators can significantly improve their performance. Ensuring that the training data covers a wide range of scenarios, coding practices, and domain-specific requirements can improve the relevance and accuracy of the generated code.

3.4. Implement Monitoring and Reporting

Set up monitoring and reporting mechanisms to track the performance and accuracy of the AI code generator. Regularly review the reports to identify trends, issues, and areas for improvement. This proactive approach can help address problems early and optimize the testing process.
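As a rough sketch of such a report, the snippet below aggregates the outcomes of sanity test runs into a pass rate and a list of the most common failure types. The `summarize` function, the record layout, and the sample runs are made up for illustration and do not represent measured results.

```python
from collections import Counter


def summarize(runs: list[dict]) -> dict:
    """Aggregate sanity test outcomes into a small report."""
    total = len(runs)
    passed = sum(1 for run in runs if run["passed"])
    failures = Counter(run["failure"] for run in runs if not run["passed"])
    return {
        "total": total,
        "pass_rate": passed / total if total else 0.0,
        "top_failures": failures.most_common(3),
    }


# Illustrative records only; the "failure" labels are hypothetical categories.
runs = [
    {"passed": True},
    {"passed": False, "failure": "syntax"},
    {"passed": False, "failure": "syntax"},
    {"passed": True},
    {"passed": False, "failure": "edge_case"},
]

report = summarize(runs)
print(report)
```

Tracking the same summary over time shows whether changes to prompts, models, or checks are actually reducing a given class of failure.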

4. Conclusion

Sanity testing of AI code generators presents several challenges, including ensuring code quality, contextual relevance, edge case handling, debugging, and maintainability. By implementing robust validation mechanisms, incorporating domain-specific knowledge, and fostering collaboration between AI systems and human developers, these challenges can be effectively addressed. Embracing best practices such as continuous testing, investing in quality training data, and comprehensive monitoring will further improve the reliability and performance of AI-generated code. As AI technology continues to evolve, ongoing refinement of testing strategies and practices will be vital for realizing the full potential of AI code generators in software development.