Introduction

In the evolving landscape of artificial intelligence (AI), code generation has emerged as a transformative technology. AI code generators, powered by large language models such as OpenAI’s GPT series, Google’s Gemini (formerly Bard), and others, promise to automate parts of software development and accelerate productivity. However, the performance of these AI tools is critical in determining their effectiveness and reliability. Performance testing of AI code generators ensures that they meet quality standards, produce accurate and efficient code, and integrate well within existing development environments. This article delves into the various aspects of performance testing for AI code generators, outlining methodologies, challenges, and best practices.

Understanding AI Code Generators

AI code generators utilize machine learning models, particularly those based on deep learning, to generate code from natural language descriptions or other inputs. These generators can assist in writing boilerplate code, suggesting improvements, and even creating complex algorithms. The accuracy and efficiency of these tools directly impact the quality of the generated code, making performance testing a crucial aspect.

Key Metrics for Performance Testing

Accuracy

Accuracy is perhaps the most critical metric. It measures how well the AI generator produces correct and functional code. Testing for accuracy involves:

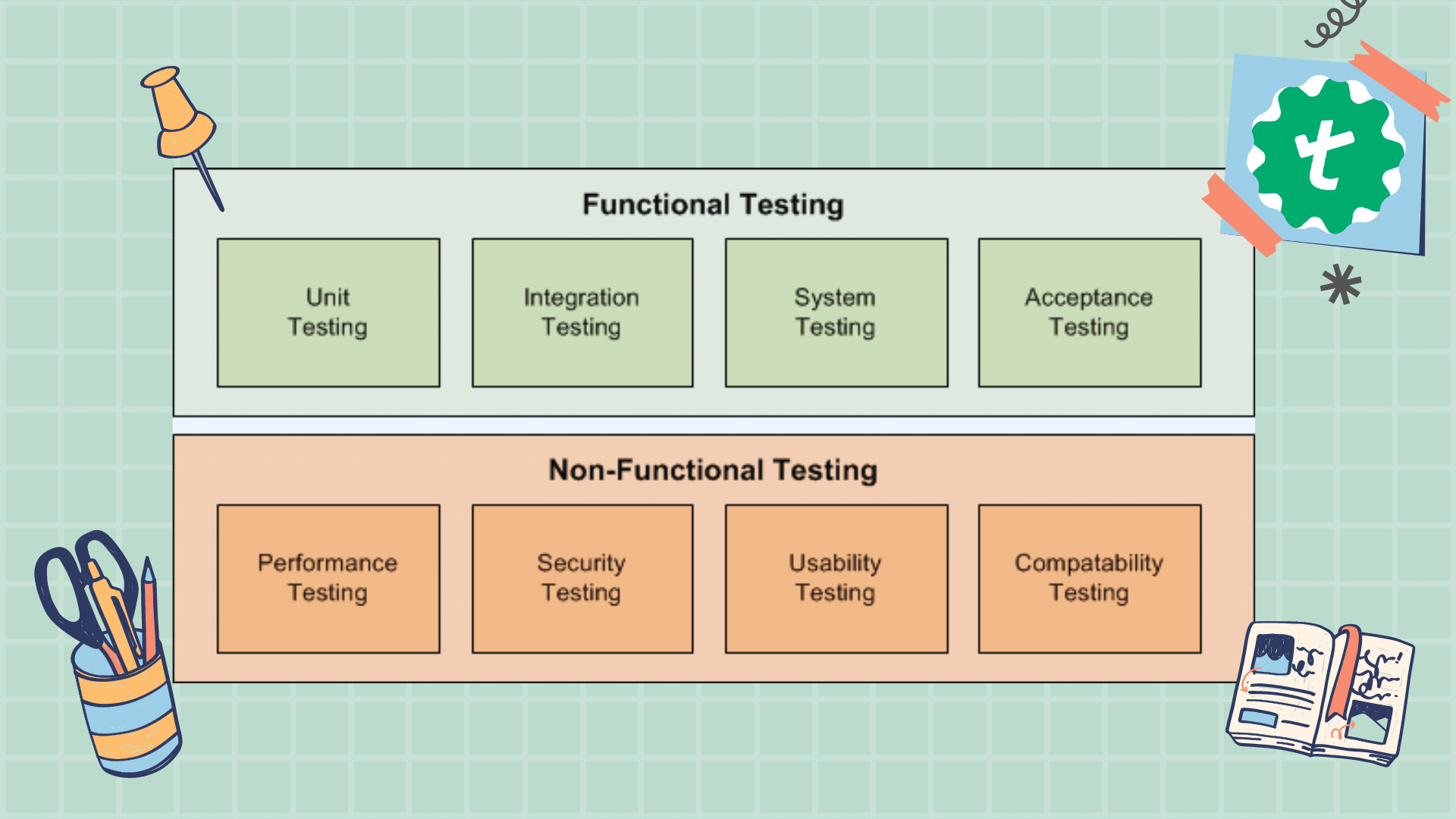

Functional Testing: Checking if the code fulfills the specified requirements and performs the intended functions correctly.

Edge Cases: Ensuring the code handles unusual or extreme conditions gracefully.
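Both kinds of accuracy check can be automated by running the generated code against a table of inputs and expected outputs, with edge cases mixed in alongside typical ones. The sketch below assumes the generator returns the source of a single Python function whose name is known in advance; the function `largest`, its "model output", and the helper names are hypothetical. Because `exec()` runs arbitrary code, a real harness should execute it in a sandbox or separate process.

```python
from typing import Any, Callable


def load_generated_function(source: str, name: str) -> Callable[..., Any]:
    """Execute generated source in a fresh namespace and return the named function.

    Warning: exec() runs arbitrary code; isolate it in a sandbox in practice.
    """
    namespace: dict[str, Any] = {}
    exec(source, namespace)
    return namespace[name]


def accuracy(func: Callable[..., Any], cases: list[tuple[tuple, Any]]) -> float:
    """Return the fraction of (args, expected) cases the function gets right.

    Any exception raised by the function counts as a failure.
    """
    passed = 0
    for args, expected in cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass
    return passed / len(cases)


# Hypothetical model output for: "return the largest element of a list, or None if it is empty".
generated = '''
def largest(xs):
    return max(xs)
'''

cases = [
    (([3, 1, 2],), 3),   # typical case
    (([-5, -2],), -2),   # negative numbers
    (([7],), 7),         # single element
    (([],), None),       # edge case: empty input (max() raises ValueError here)
]

func = load_generated_function(generated, "largest")
print(f"accuracy: {accuracy(func, cases):.2f}")  # accuracy: 0.75
```

The typical cases all pass, and only the empty-list edge case exposes the bug, which is why edge cases belong in the same table as the functional checks.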

Efficiency

Efficiency tests focus on the performance of the generated code in terms of speed and resource usage. Metrics include:

Execution Time: How long the code takes to run.

Memory Usage: The amount of memory the code consumes during execution.

Scalability: How well the code performs as the input size increases.
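All three efficiency metrics can be collected with the Python standard library: `time.perf_counter` for execution time, `tracemalloc` for peak memory, and a loop over growing input sizes for scalability. This is a minimal sketch; `sort_copy` stands in for a hypothetical generated function, and `tracemalloc` only sees allocations made through Python's memory allocator, not memory used by native extensions.

```python
import time
import tracemalloc
from typing import Any, Callable


def profile(func: Callable[[Any], Any], arg: Any, repeats: int = 5) -> tuple[float, int]:
    """Return (best wall-clock seconds over `repeats` runs, peak traced bytes) for func(arg)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(arg)
        best = min(best, time.perf_counter() - start)

    # Measure memory in a separate run so tracing overhead does not skew the timings.
    tracemalloc.start()
    func(arg)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return best, peak


def sort_copy(xs: list[int]) -> list[int]:
    # Stand-in for a generated function under test.
    return sorted(xs)


# Scalability: watch how time and memory grow as the input size increases.
for n in (1_000, 10_000, 100_000):
    data = list(range(n, 0, -1))
    seconds, peak_bytes = profile(sort_copy, data)
    print(f"n={n:>7}: {seconds * 1e3:.3f} ms, peak {peak_bytes / 1024:.1f} KiB")
```

Taking the best of several runs reduces noise from other processes; comparing the numbers across sizes shows whether growth looks roughly linear, quadratic, or worse.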

Code Quality

Code quality encompasses readability, maintainability, and adherence to coding standards. Evaluation criteria include:

Clarity: Is the code easy to understand and follow?

Maintainability: Can the code be easily updated or modified in the future?

Adherence to Standards: Does the code comply with industry or organization-specific coding standards?
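Dedicated analyzers cover these criteria in depth, but a few checks are easy to automate directly from the syntax tree. The sketch below uses Python's `ast` module to flag missing docstrings (clarity), overly long functions (maintainability), and non-snake_case names (a stand-in for a coding standard). The specific rules and the 30-line limit are illustrative assumptions, not a recommended standard.

```python
import ast
import re

SNAKE_CASE = re.compile(r"^[a-z_][a-z0-9_]*$")


def quality_issues(source: str, max_lines: int = 30) -> list[str]:
    """Return human-readable quality issues for each function defined in `source`."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Clarity: every function should explain itself.
            if ast.get_docstring(node) is None:
                issues.append(f"{node.name}: missing docstring")
            # Maintainability: long functions are harder to modify safely.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                issues.append(f"{node.name}: {length} lines (limit {max_lines})")
            # Standards: PEP 8 recommends snake_case function names.
            if not SNAKE_CASE.match(node.name):
                issues.append(f"{node.name}: name is not snake_case")
    return issues


# Hypothetical generated snippet.
generated = '''
def ComputeTotal(items):
    return sum(i.price for i in items)
'''

for issue in quality_issues(generated):
    print(issue)
# ComputeTotal: missing docstring
# ComputeTotal: name is not snake_case
```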

Robustness

Robustness testing assesses how well the AI-generated code handles errors and unexpected situations. Key aspects include:

Error Handling: How the code manages exceptions and errors.

Resilience: The code’s ability to recover from failures or incorrect inputs.
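One way to test error handling is to feed the generated code deliberately invalid inputs and classify each reaction: a documented exception is acceptable, an unrelated exception suggests a missing check, and silently returning a value is usually the worst outcome. In this sketch, `parse_port` is a hypothetical generated function, and treating `ValueError` and `TypeError` as the "documented" exceptions is an assumption for the example.

```python
from typing import Any, Callable


def robustness_report(
    func: Callable[[Any], Any],
    bad_inputs: list[Any],
    allowed: tuple[type[BaseException], ...] = (ValueError, TypeError),
) -> dict[str, str]:
    """Classify how func reacts to each invalid input.

    'handled'    : raised one of the allowed, documented exception types
    'unexpected' : raised some other exception
    'silent'     : returned a value instead of signalling the error
    """
    report = {}
    for value in bad_inputs:
        try:
            func(value)
            report[repr(value)] = "silent"
        except allowed:
            report[repr(value)] = "handled"
        except Exception:
            report[repr(value)] = "unexpected"
    return report


def parse_port(text):
    # Hypothetical generated code: strips whitespace but never checks the 1-65535 range.
    return int(text.strip())


print(robustness_report(parse_port, ["abc", "70000", None, "0"]))
# {"'abc'": 'handled', "'70000'": 'silent', 'None': 'unexpected', "'0'": 'silent'}
```

Here the report shows that out-of-range ports are accepted silently and that `None` triggers an `AttributeError` rather than a clear validation error.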

Methodologies for Performance Testing

Test Case Development

Developing a comprehensive set of test cases is crucial. Test cases should cover a range of scenarios, from simple tasks to complex operations, and include both typical and edge cases. These cases should be designed to evaluate all relevant metrics, such as functionality, performance, and error handling.
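Tagging each test case with the kind of scenario it covers and the metric it evaluates makes gaps in a suite visible. The sketch below is one possible layout; the `Kind` categories, the `EvalCase` fields, and the metric names are hypothetical choices, not a standard schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any


class Kind(Enum):
    TYPICAL = "typical"
    EDGE = "edge"
    ERROR = "error"


@dataclass(frozen=True)
class EvalCase:
    prompt: str     # natural-language task given to the generator
    args: tuple     # inputs passed to the generated function
    expected: Any   # expected return value, or an exception type for ERROR cases
    kind: Kind
    metric: str     # e.g. "functionality", "performance", "error_handling"


def coverage_gaps(suite: list[EvalCase], required_metrics: set[str]) -> list[str]:
    """List scenario kinds and required metrics that no case in the suite covers."""
    kinds = {case.kind for case in suite}
    metrics = {case.metric for case in suite}
    gaps = [f"kind:{k.value}" for k in Kind if k not in kinds]
    gaps += [f"metric:{m}" for m in sorted(required_metrics - metrics)]
    return gaps


suite = [
    EvalCase("sum a list", ([1, 2, 3],), 6, Kind.TYPICAL, "functionality"),
    EvalCase("sum a list", ([],), 0, Kind.EDGE, "functionality"),
    EvalCase("sum a list", (list(range(10**5)),), sum(range(10**5)), Kind.TYPICAL, "performance"),
]

print(coverage_gaps(suite, {"functionality", "performance", "error_handling"}))
# ['kind:error', 'metric:error_handling']
```

The output points out that the suite has no cases for invalid input, so error handling is not being evaluated at all.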

Automated Testing

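Automated testing frameworks can streamline the performance testing process. Unit-test frameworks such as pytest or JUnit can check the functional behavior of generated code, Selenium can drive browser-based functional tests, JMeter can run load and performance tests, and SonarQube can perform static code-quality analysis. Integrated into a continuous integration pipeline, these tools provide continuous feedback.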
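As a small example of the unit-test layer, the sketch below wraps a hypothetical generated `slugify` function in a standard-library `unittest` suite and runs it programmatically. A CI job could turn `result.wasSuccessful()` into a non-zero exit code so that any failing check blocks a merge. The function and test names are illustrative only.

```python
import unittest


def slugify(title: str) -> str:
    # Hypothetical generated function under test.
    return "-".join(title.lower().split())


class TestGeneratedSlugify(unittest.TestCase):
    def test_typical(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_extra_whitespace(self):
        self.assertEqual(slugify("  Many   spaces "), "many-spaces")

    def test_empty(self):
        self.assertEqual(slugify(""), "")


def run_suite() -> unittest.TestResult:
    """Load and run the test case; results are summarised on stderr."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestGeneratedSlugify)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
print("all passed" if result.wasSuccessful() else "failures detected")
```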

Benchmarking

Benchmarking involves comparing the performance of AI-generated code against known standards, such as reference implementations, human-written solutions, or established benchmark problem sets. This comparison helps identify areas where the AI falls short and provides a reference point for improvement.
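A simple benchmark compares a generated function with a trusted baseline on the same randomly generated inputs, measuring both how often the outputs agree and how the runtimes compare. In this sketch, `reference_dedupe` plays the role of the baseline and `generated_dedupe` is a hypothetical model output that is correct but uses a quadratic algorithm; the trial count and input size are arbitrary.

```python
import random
import timeit


def reference_dedupe(xs):
    """Trusted baseline: keep the first occurrence of each item, preserving order."""
    return list(dict.fromkeys(xs))


def generated_dedupe(xs):
    # Hypothetical model output: correct, but each membership test scans a list (O(n^2)).
    out = []
    for x in xs:
        if x not in out:
            out.append(x)
    return out


def benchmark(candidate, baseline, trials=100, size=500, seed=0):
    """Return (fraction of inputs where outputs agree, candidate/baseline runtime ratio)."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    inputs = [[rng.randint(0, size // 2) for _ in range(size)] for _ in range(trials)]
    agreement = sum(candidate(xs) == baseline(xs) for xs in inputs) / trials

    sample = inputs[0]
    t_candidate = min(timeit.repeat(lambda: candidate(sample), number=20, repeat=3))
    t_baseline = min(timeit.repeat(lambda: baseline(sample), number=20, repeat=3))
    return agreement, t_candidate / t_baseline


agreement, slowdown = benchmark(generated_dedupe, reference_dedupe)
print(f"agreement with baseline: {agreement:.0%}, relative runtime: {slowdown:.1f}x")
```

Full agreement combined with a large runtime ratio is a typical finding: the generated code passes functional tests but would not scale as well as the reference.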

User Testing

Involving actual users in testing can provide valuable insights into the practicality and usability of the generated code. User feedback can highlight issues that automated tests may miss and offer a perspective on real-world application.

Challenges in Performance Testing

Complexity of AI Models

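The complexity of AI models can make performance testing challenging. Because the models are large and largely opaque, it is hard to predict which prompts will lead to incorrect or inefficient code. And because a model can produce many structurally different but valid snippets for the same task, it is difficult to establish consistent benchmarks: tests have to judge the behavior of the code rather than compare it against a single expected answer.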

Variability in Results

AI code generators may produce different results for the same input due to their inherent stochastic nature. This variability can complicate the assessment of accuracy and reliability.
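A common way to account for this variability is to generate several samples per prompt and report a probability rather than a single pass/fail. The pass@k metric, popularized by OpenAI's Codex evaluation on the HumanEval benchmark, estimates the chance that at least one of k samples passes the tests. With n samples of which c pass, the unbiased estimate is pass@k = 1 - C(n - c, k) / C(n, k). The sketch below implements that formula directly; the sample counts in the example are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of P(at least one of k samples passes),
    given c passing samples out of n generated for the same prompt."""
    if k > n:
        raise ValueError("k must not exceed n")
    if n - c < k:
        # Every subset of size k must contain at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Example: 20 samples for one prompt, 5 of which pass the tests.
print(round(pass_at_k(20, 5, 1), 3))   # 0.25
print(round(pass_at_k(20, 5, 10), 3))  # 0.984
```

Averaging pass@k over all prompts in a suite gives a single score that is far less sensitive to one lucky or unlucky sample than a single-run pass rate.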

Integration Issues

Testing how well AI-generated code integrates with existing systems and libraries can be complex. Ensuring compatibility and seamless integration is crucial for practical use.
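One inexpensive integration check is to confirm that generated code only imports modules the project already depends on, since an unexpected dependency is a frequent source of breakage. The sketch below parses the code with `ast` and compares its top-level imports against an allowlist; the allowlist is a hypothetical stand-in for a project's declared dependencies. It does not verify version compatibility, which still requires running the code against the real libraries.

```python
import ast


def disallowed_imports(source: str, allowed: set[str]) -> list[str]:
    """Return top-level module names imported by `source` that are not in `allowed`."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Relative imports (level > 0) refer to the project itself, so they are skipped.
            found.add(node.module.split(".")[0])
    return sorted(found - allowed)


# Hypothetical generated snippet.
generated = '''
import json
import requests
from yaml import safe_load
from os.path import join
'''

# Hypothetical allowlist mirroring the project's declared dependencies.
project_deps = {"json", "os", "requests"}
print(disallowed_imports(generated, project_deps))  # ['yaml']
```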

Evolving Models

AI models are continually updated and improved. Performance testing needs to adapt to these changes, since a new model version may alter the behavior and outputs that existing tests rely on.
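When a model is updated, rerunning the same suite and diffing per-test results against the previous version shows exactly what changed. This is a minimal sketch; the test IDs and results are hypothetical. Because model output is stochastic, a single flipped result may be noise, so in practice each entry would better be a pass rate over several samples than a single boolean.

```python
def compare_versions(old: dict[str, bool], new: dict[str, bool]) -> dict[str, list[str]]:
    """Compare per-test pass/fail results from two model versions."""
    shared = old.keys() & new.keys()
    return {
        "regressions": sorted(t for t in shared if old[t] and not new[t]),
        "fixes": sorted(t for t in shared if not old[t] and new[t]),
        "added": sorted(new.keys() - old.keys()),
        "dropped": sorted(old.keys() - new.keys()),
    }


# Hypothetical results keyed by test-case ID.
v1 = {"sort_basic": True, "parse_date": False, "dedupe_edge": True}
v2 = {"sort_basic": True, "parse_date": True, "dedupe_edge": False, "retry_http": True}
print(compare_versions(v1, v2))
# {'regressions': ['dedupe_edge'], 'fixes': ['parse_date'], 'added': ['retry_http'], 'dropped': []}
```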

Best Practices for Effective Performance Testing

Define Clear Objectives

Set clear goals for what you want to achieve with performance testing. Define specific metrics and criteria for success, such as acceptable thresholds for accuracy and efficiency.
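Writing the success criteria down as data makes them testable: a pipeline can compare each run's measurements against the thresholds and report which objectives were missed. The threshold values and field names below are illustrative assumptions; each team would set its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Illustrative values only.
    min_pass_rate: float = 0.90      # accuracy objective
    max_runtime_ms: float = 200.0    # efficiency objective for the benchmark workload
    max_quality_issues: int = 5      # code-quality objective


def evaluate(pass_rate: float, runtime_ms: float, quality_issues: int,
             t: Thresholds = Thresholds()) -> list[str]:
    """Return the objectives that were missed; an empty list means all criteria are met."""
    failures = []
    if pass_rate < t.min_pass_rate:
        failures.append(f"pass rate {pass_rate:.0%} < {t.min_pass_rate:.0%}")
    if runtime_ms > t.max_runtime_ms:
        failures.append(f"runtime {runtime_ms:.0f} ms > {t.max_runtime_ms:.0f} ms")
    if quality_issues > t.max_quality_issues:
        failures.append(f"{quality_issues} quality issues > {t.max_quality_issues}")
    return failures


print(evaluate(pass_rate=0.86, runtime_ms=140.0, quality_issues=2))
# ['pass rate 86% < 90%']
```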

Use a Comprehensive Testing Suite

Develop a thorough testing suite that covers various aspects of performance, including functionality, efficiency, code quality, and robustness. Include automated tests for consistency and scalability.

Incorporate Real-World Scenarios

Test AI-generated code in real-world scenarios to assess its practical utility. This approach helps identify issues that may not be apparent in controlled testing environments.

Regularly Update Test Cases

Keep test cases up-to-date with changes in AI models and coding standards. Regularly review and revise test cases to ensure they remain relevant and effective.

Monitor and Analyze Results

Continuously monitor the performance of AI code generators and analyze test results to identify trends and areas for improvement. Use this data to refine the models and improve their performance.
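A simple form of trend monitoring is to compare each run's pass rate with the average of the few runs before it and flag sharp drops for investigation. The window size, tolerance, and nightly pass rates below are hypothetical.

```python
from statistics import mean


def flag_drops(history: list[float], window: int = 3, tolerance: float = 0.05) -> list[int]:
    """Return indices of runs whose pass rate falls more than `tolerance`
    below the mean of the preceding `window` runs."""
    flagged = []
    for i in range(window, len(history)):
        baseline = mean(history[i - window:i])
        if history[i] < baseline - tolerance:
            flagged.append(i)
    return flagged


# Hypothetical nightly pass rates for one code generator.
rates = [0.91, 0.92, 0.90, 0.91, 0.84, 0.90, 0.91]
print(flag_drops(rates))  # [4]
```

A rolling baseline adapts to gradual improvements while still catching sudden regressions, such as those that can follow a model update.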

Conclusion

Performance testing of AI code generators is a critical process that ensures the reliability, accuracy, and efficiency of these transformative tools. By focusing on key metrics such as accuracy, efficiency, code quality, and robustness, and employing effective methodologies and best practices, developers and organizations can maximize the benefits of AI in code generation. Despite the challenges, a well-structured performance testing approach can lead to more reliable and higher-quality AI-generated code, paving the way for advancements in software development and productivity.