In AI code generation, Python is the go-to programming language for most developers and researchers. The rise of powerful frameworks such as TensorFlow, PyTorch, and Hugging Face's Transformers has made it easier than ever to design and implement complex AI systems. However, with complexity comes the challenge of debugging. Debugging AI code is a vital skill, because errors can stem not only from ordinary coding mistakes but also from model architecture, data handling, and hardware integration (such as GPUs). This article explores common debugging techniques specific to Python, with a focus on AI code generation.

1. Understanding the Importance of Debugging in AI Code Generation

AI models, particularly in deep learning and natural language processing, rely on large datasets, sophisticated neural networks, and extensive compute resources. A small mistake in the model architecture or dataset can easily propagate into major failures in the final output. Unlike traditional software development, where errors are often deterministic, AI development can involve non-deterministic errors caused by issues like floating-point precision or random initialization of weights. Debugging in AI is therefore both crucial and distinct from debugging ordinary software.
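One common way to tame this non-determinism is to fix the random seeds at the start of a run, so that a failing run can be replayed. The sketch below is a minimal illustration rather than a complete recipe: `seed_everything` is a hypothetical helper name, and it seeds NumPy and PyTorch only if they happen to be installed. Some GPU operations can remain non-deterministic even with fixed seeds.

```python
import random


def seed_everything(seed: int) -> None:
    """Seed the random number generators that are available (hypothetical helper)."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy is optional in this sketch
    try:
        import torch
        torch.manual_seed(seed)
    except ImportError:
        pass  # PyTorch is optional in this sketch


seed_everything(42)
first_run = [random.random() for _ in range(3)]

seed_everything(42)
second_run = [random.random() for _ in range(3)]

# Same seed, same sequence: the two runs draw identical numbers.
print(first_run == second_run)
```

Calling the helper once at the top of a training script is usually enough to make weight initialization and data shuffling repeatable on the same machine.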

2. Common Errors in AI Code Generation

Before diving into debugging techniques, it's essential to identify the common types of errors developers face in AI code generation:

Syntax and Runtime Errors: These are basic coding issues that occur during the parsing or execution of Python code.

Tensor Dimension Mismatch: In deep learning, tensor shapes must align correctly for matrix operations. Mismatched dimensions are among the most common errors in neural networks.

Data Preprocessing Issues: Handling large datasets often leads to problems such as incorrect data normalization, missing values, or improper tokenization for NLP tasks.

Memory Leaks and Overflow: AI models can be memory-intensive, and improper use of memory during training on GPUs or with large datasets can cause the system to crash or freeze.

Training Instability: Errors can occur during model training, such as vanishing or exploding gradients, leading to poor model performance or failure to converge.
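To make the dimension-mismatch category concrete, here is a small framework-free sketch. It assumes each layer is described by a hypothetical `(in_features, out_features)` pair and walks the chain to find the first layer whose expected input size does not match what the previous layer produces. Real frameworks perform this check for you at runtime; the point here is only to show what the error means.

```python
from typing import List, Optional, Tuple


def find_shape_mismatch(input_dim: int, layers: List[Tuple[int, int]]) -> Optional[int]:
    """Return the index of the first layer whose expected input size does not
    match the previous output size, or None if the chain is consistent.

    Each layer is described as (in_features, out_features).
    """
    current = input_dim
    for index, (in_features, out_features) in enumerate(layers):
        if in_features != current:
            return index
        current = out_features
    return None


# 784 -> 128 -> 64, but the last layer expects 32 inputs instead of 64.
layers = [(784, 128), (128, 64), (32, 10)]
print(find_shape_mismatch(784, layers))  # prints 2
```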

3. Techniques for Debugging AI Code in Python

3.1 Print Statements for Basic Debugging

The simplest and most commonly used method for debugging Python code is to insert print statements to track values and monitor program execution. While this method may seem elementary, it is surprisingly effective for diagnosing many types of errors, especially in data preprocessing and during the forward pass of AI models.

For example, in a neural network model, you can print the shapes of tensors after each layer:

    def forward(self, x):
        # Print the tensor shape before and after each layer
        print("Input shape:", x.shape)
        x = self.layer1(x)
        print("After layer1:", x.shape)
        x = self.layer2(x)
        print("After layer2:", x.shape)
        return x

This allows you to catch shape mismatches and other potential issues early on.

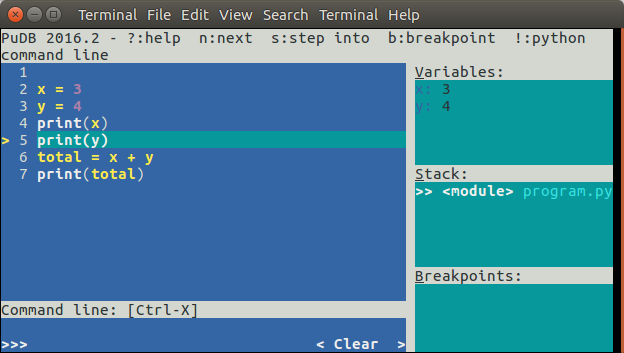

3.2 Using the Python Debugger (PDB)

The Python debugger (PDB) is an interactive tool that lets you inspect and control the execution of your program. It lets you set breakpoints, step through code, and inspect variables at runtime. PDB is particularly useful for finding logic errors and understanding how your AI model behaves during training or inference.

To start PDB, place the following line at the desired point in your code:

    import pdb; pdb.set_trace()

This line pauses execution, allowing you to step through the code, print variable values, and resume execution. You can also set conditional breakpoints to halt execution only when specific conditions are met.
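A conditional breakpoint can be sketched in plain Python by guarding the call with an `if`. The example below is a minimal illustration with made-up loss values; `check_loss` is a hypothetical helper, and it uses the built-in `breakpoint()` (Python 3.7 and later), which opens PDB by default. PDB's own `break` command also accepts a condition after the location.

```python
import math


def check_loss(loss: float, step: int) -> None:
    """Pause in the debugger only when the loss is NaN or infinite."""
    if math.isnan(loss) or math.isinf(loss):
        print(f"Non-finite loss {loss} at step {step}; entering debugger")
        breakpoint()  # opens PDB by default


# Illustrative loss values; all are finite, so the debugger is never entered here.
for step, loss in enumerate([0.92, 0.71, 0.55]):
    check_loss(loss, step)
```

Because the guard is ordinary code, the check costs almost nothing on healthy steps and drops you into the debugger at the first step where training goes wrong.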

3.3 TensorBoard for Visual Debugging

TensorBoard is a powerful visualization tool used with TensorFlow and PyTorch to monitor model training, view metrics, and inspect the model graph. It helps you track the loss, accuracy, and other performance metrics during training, making it easier to spot anomalies such as sudden spikes in loss or stagnation in learning.

For instance, you can log scalar metrics from a PyTorch training loop for TensorBoard to plot; gradient statistics can be logged the same way to reveal whether gradients are vanishing or exploding during backpropagation:

    from torch.utils.tensorboard import SummaryWriter

    writer = SummaryWriter()

    for epoch in range(epochs):
        # Training code (computes `loss` and `accuracy`) goes here
        writer.add_scalar('Loss/train', loss.item(), epoch)
        writer.add_scalar('Accuracy/train', accuracy, epoch)

    writer.close()

By examining the logs in TensorBoard, you can identify issues related to training stability, such as improper learning rates or poorly initialized weights.
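The anomalies you look for on a TensorBoard curve can also be checked programmatically. The following sketch is a rough heuristic, not part of TensorBoard: `find_loss_spikes`, `has_stagnated`, and their thresholds are hypothetical choices that would need tuning for a real model.

```python
from typing import List


def find_loss_spikes(losses: List[float], factor: float = 2.0) -> List[int]:
    """Return the indices where the loss is more than `factor` times the previous value."""
    return [
        i for i in range(1, len(losses))
        if losses[i - 1] > 0 and losses[i] > factor * losses[i - 1]
    ]


def has_stagnated(losses: List[float], window: int = 3, tolerance: float = 1e-3) -> bool:
    """Return True if the last `window + 1` loss values span less than `tolerance`."""
    if len(losses) < window + 1:
        return False
    recent = losses[-(window + 1):]
    return max(recent) - min(recent) < tolerance


history = [1.20, 0.90, 0.70, 2.50, 0.65, 0.6501, 0.6502, 0.6500]
print(find_loss_spikes(history))  # prints [3]
print(has_stagnated(history))     # prints True
```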

3.4 Error Handling with Try-Except Blocks

In Python, try-except blocks are valuable for catching runtime errors and preventing the program from crashing. For AI code generation, this approach is particularly helpful when working with external libraries, file handling, or new code that may produce unexpected results.

Here's an example of using try-except in a data loading function:

    try:
        data = load_data(file_path)
    except FileNotFoundError as e:
        # Report the missing file instead of crashing
        print(f"Error: {e}. Check the file path.")
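As a self-contained variant of the same idea, the sketch below assumes the dataset is stored as JSON. `load_json_dataset` is a hypothetical function name and the file path is a placeholder. It catches two specific exceptions rather than a bare `Exception`, so unrelated bugs still surface.

```python
import json
from typing import Any, Optional


def load_json_dataset(file_path: str) -> Optional[Any]:
    """Load a JSON dataset, returning None (instead of crashing) on common failures."""
    try:
        with open(file_path, "r", encoding="utf-8") as handle:
            return json.load(handle)
    except FileNotFoundError as e:
        print(f"Error: {e}. Check the file path.")
    except json.JSONDecodeError as e:
        print(f"Error: {file_path} is not valid JSON ({e}).")
    return None


# The path below is a placeholder that is not expected to exist.
data = load_json_dataset("no_such_dataset.json")
print(data is None)
```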

In AI applications, try-except blocks are also useful for handling issues with GPU usage. For example, if you're running code on machines with varying hardware setups, you might want to catch errors related to GPU availability:

    import torch

    try:
        # Fall back to the CPU when no CUDA device is available
        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    except Exception as e:
        print(f"Error while selecting device: {e}")

3.5 Unit Testing and Assertion Statements

While unit testing may seem more aligned with traditional software development, it is highly beneficial for AI projects. You can create tests to validate small, modular components of the AI pipeline, such as checking the correctness of data preprocessing steps or the output shapes of model layers.

Assertion statements can also be used to enforce certain conditions during execution. For instance, when working with tensors, you can assert that the dimensions are correct before proceeding:

    assert x.shape == (batch_size, num_features), (
        f"Expected shape ({batch_size}, {num_features}), but got {tuple(x.shape)}"
    )

By catching errors early with assertions, you can avoid hard-to-debug issues further down the pipeline.
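A unit test for a preprocessing step might look like the sketch below. The function `min_max_normalize` is a hypothetical example written for this illustration, not part of any library, and the tests use Python's built-in `unittest` module.

```python
import unittest
from typing import List


def min_max_normalize(values: List[float]) -> List[float]:
    """Scale values linearly into the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # avoid division by zero on constant input
    return [(v - low) / (high - low) for v in values]


class TestMinMaxNormalize(unittest.TestCase):
    def test_output_range(self):
        self.assertEqual(min_max_normalize([2.0, 4.0, 6.0]), [0.0, 0.5, 1.0])

    def test_constant_input(self):
        self.assertEqual(min_max_normalize([3.0, 3.0]), [0.0, 0.0])

    def test_length_is_preserved(self):
        values = [1.0, 5.0, 2.0, 8.0]
        self.assertEqual(len(min_max_normalize(values)), len(values))


# Run the tests programmatically so the script continues afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestMinMaxNormalize)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
print(outcome.wasSuccessful())
```

In a real project the test class would normally live in its own file and be run with `python -m unittest`.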

3.6 Logging for Tracking and Diagnosing Issues

Logging is a more sophisticated alternative to print statements, especially in large-scale AI applications where the model runs for hours or days. Using Python's built-in logging module, you can create logs that track model performance, identify issues in the code, and provide useful context when errors occur.

For example, you can set up logging to capture loss and accuracy metrics during training:

    import logging

    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger()

    # Inside the training loop, once `epoch`, `loss`, and `accuracy` are known
    logger.info(f"Epoch {epoch}: Loss={loss}, Accuracy={accuracy}")

Logging can also capture stack traces for exceptions that you catch (for example, by calling logger.exception inside an except block), making it easier to trace the source of an issue without halting the program.
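The sketch below shows one way to record a stack trace while letting the run continue. The batches and the `process_batch` function are toy stand-ins invented for this example. `logger.exception` is a standard method that logs at ERROR level and appends the current traceback.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("training")  # the logger name is arbitrary


def process_batch(batch):
    """Toy stand-in for a training step: fails on an empty batch."""
    return sum(batch) / len(batch)


batches = [[1.0, 2.0], [], [3.0, 5.0]]
results = []
for index, batch in enumerate(batches):
    try:
        results.append(process_batch(batch))
    except ZeroDivisionError:
        # Logs the message plus the full stack trace, then moves on.
        logger.exception("Batch %d failed; skipping it", index)

logger.info("Processed %d of %d batches", len(results), len(batches))
```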

3.7 Profiling and Memory Usage Monitoring

Memory leaks and performance bottlenecks are common issues in AI code, especially when dealing with large datasets and complex models. Tools like cProfile (part of the standard library) and memory_profiler (a third-party package) help identify which parts of the code are slow or consume too much memory.

To profile your code, you can use cProfile:

    import cProfile

    cProfile.run('train_model()')

For tracking memory usage, memory_profiler is an excellent tool that can be installed with pip. You can decorate functions to monitor their memory consumption:

    from memory_profiler import profile

    @profile
    def train_model():
        # Training code
        pass

These tools help you understand the resource usage of your AI models and can guide optimizations to improve efficiency and scalability.
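For a version that runs without extra packages, the sketch below combines cProfile with `pstats` to print the most expensive calls, and swaps memory_profiler for the standard library's `tracemalloc` to measure peak memory. The `train_model` function here is a toy stand-in for a real training loop.

```python
import cProfile
import io
import pstats
import tracemalloc


def train_model():
    """Toy stand-in for a training loop that allocates some memory."""
    data = [i * 0.5 for i in range(100_000)]
    return sum(data)


# Time profile: collect stats and show the five most expensive calls.
profiler = cProfile.Profile()
profiler.enable()
train_model()
profiler.disable()

report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())

# Memory profile: tracemalloc reports current and peak traced allocations in bytes.
tracemalloc.start()
train_model()
current_bytes, peak_bytes = tracemalloc.get_traced_memory()
tracemalloc.stop()
print(f"Peak memory during train_model: {peak_bytes / 1024:.1f} KiB")
```

Note that `tracemalloc` only tracks memory allocated by Python itself, so GPU memory and memory allocated inside native extensions may need framework-specific tools.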

4. Debugging AI-Specific Libraries

Popular AI libraries like TensorFlow, PyTorch, and Hugging Face Transformers provide their own debugging tools and error-handling mechanisms. For example:

PyTorch: PyTorch raises informative error messages that usually point directly to the cause of the problem, especially for tensor dimension mismatches.

TensorFlow: TensorFlow offers runtime error checking and eager execution, which can help you catch issues during model building and training.

Transformers: The Hugging Face library comes with extensive logging and tracing mechanisms to help debug issues with NLP models and pipelines.

5. Conclusion

Debugging AI code generation in Python can be a complex task, but with the right tools and techniques, you can efficiently diagnose and fix issues. Techniques like print statements, PDB, TensorBoard, logging, and memory profiling help developers maintain control over their code and ensure models run smoothly. Understanding these methods can drastically reduce debugging time and improve the quality of AI projects, ultimately leading to more reliable and efficient models.